That’s when the technical breakthrough you’ve been waiting for arrives. I’m not primarily thinking of the huge leap when photography went largely digital and became available to consumers; changes like that are epic steps up the ladder of technological evolution.

What matters more nowadays are the small steps that creep up on you until they become second nature. One example is the convergence of cameras and camcorders, or the move from shooting with a DSLR to using an electronic viewfinder, which needed a long transition period before it could fully replace the optical one. The same goes for the electronic shutter taking over from the mechanical shutter, and for eliminating system lag, so that the camera does what you ask at the moment you ask it, not a tenth of a second later.

Anyone who has stared wearily, almost hypnotically, at the spinning colorful beach ball after applying a filter in Photoshop, or during the seconds before Lightroom crashed while saving a large file, knows what I’m talking about. Things simply can’t go fast enough.

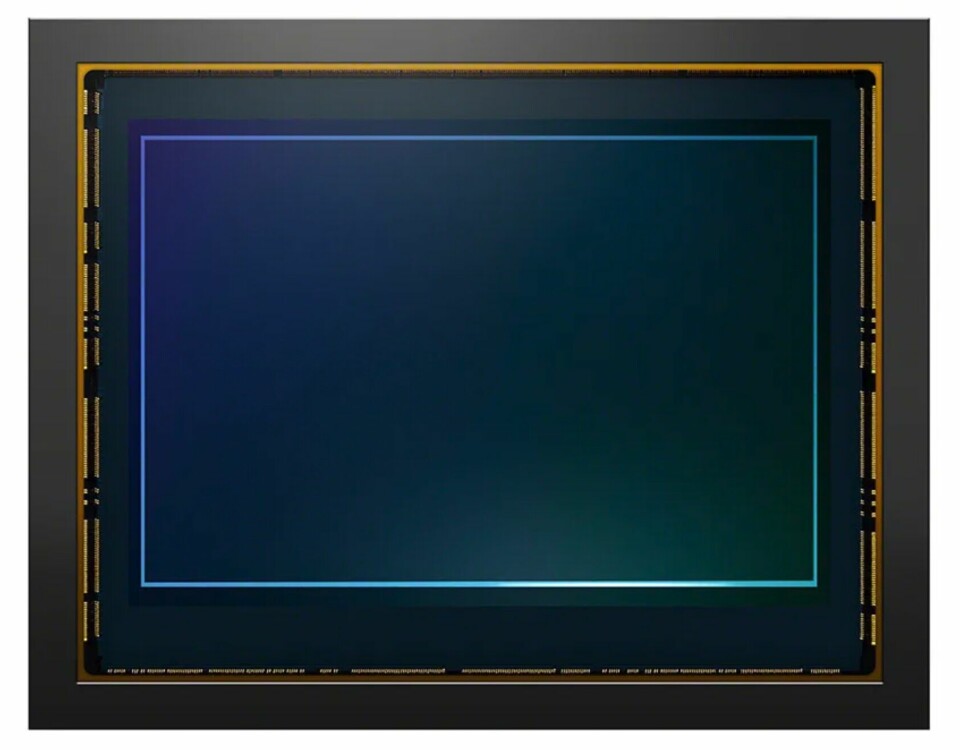

Many components evolve slowly, usually to solve specific problems, so it’s important to be clear about where the problem lies and what a possible way forward looks like. Sony manufactures its own sensors and has therefore been aware for many years of how things are developing on the semiconductor front, and also of how the surrounding technologies, such as transfer speeds, thermal management and circuit design, must develop to stay compatible with each other.

In this way, Sony has for several years set the tone for what the future will look like for consumers, and when it will arrive, a development that also takes time. When you work with microstructures, nanoscale volumes and MEMS (micro-electromechanical systems), the tolerances are so small that you cannot make big leaps; you have to proceed gradually: test, improve and test again.

Sony’s new high-speed mirrorless camera, the A9 III, will be the first full-frame mirrorless camera with a global shutter. That is something quite different from the “classic” curtain shutter, which we usually call a “mechanical shutter” because a mechanical mechanism drives the entire movement of the curtains. The problem has always been that mechanical parts take time to move, so if you want the whole process to go faster it is better to move electrons, which is exactly what electronic circuits do. Nor could the gap between the curtains be made arbitrarily narrow, because of a physical phenomenon called diffraction, which in this case works against you.

Consequently, there were limits on how fast the shutter could work, and those limits in turn capped the number of frames per second you could shoot with a DSLR and its mechanical shutter.

Development then gave us the electronic shutter, which was faster than a mechanical one. But despite this leap, which brought faster shutter speeds and lighter cameras (since you no longer need a mechanical shutter to block the light but can control the exposure electronically), some problems came along with it.

It was nice to escape the mechanical shutter sound when shooting bursts (and then wonder why the simulated electronic shutter sound had to sound like a poor recording of a mechanical one). But for those who need precise, fast shutter speeds, for example in flash photography, or who pan while shooting, some interesting effects emerged.

These effects, which showed up as skewed, wavy and wobbly frames in both stills and video whenever the camera was moved, had their origin in the sensor readout. The electrons were certainly faster than any shutter movement, but they were crowded in the corridor through which all the information had to leave the sensor. Each sensor element (the element that captures the photons, the light) had to wait its turn before its pixel information could be sent along the data line. That is how a regular CMOS sensor with sequential readout works, which is not a bad thing in itself, but even over a few milliseconds a lot can happen in the outside world, and to the light, before the last row has handed its information over to the image processor. Nor was the image processor always fast enough to receive all the data. The slower the readout, the more the scene changes while it is going on, and the more the different lines of image data fall out of sync with each other. The “rolling shutter effect”, or “jelly effect”, is one of the problems that arise because the sensor is read sequentially rather than all at once.
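To put rough numbers on the skew, here is a minimal Python sketch of a row-by-row readout. It assumes every row takes the same time to read and that the subject moves at a constant horizontal speed; the function names and the example values (4,000 rows, a 1/60 s readout, 3,000 px/s) are hypothetical illustrations, not Sony specifications.

```python
from __future__ import annotations


def row_positions(rows: int, readout_s: float, speed_px_per_s: float) -> list[float]:
    """Horizontal position of a moving vertical edge as seen by each row.

    Row 0 is read at t = 0 and each following row one line time later,
    so later rows see the edge further along its path.
    """
    line_time_s = readout_s / rows  # time between two consecutive rows
    return [speed_px_per_s * row * line_time_s for row in range(rows)]


def rolling_shutter_skew(rows: int, readout_s: float, speed_px_per_s: float) -> float:
    """Horizontal offset (pixels) between the first and the last row."""
    positions = row_positions(rows, readout_s, speed_px_per_s)
    return positions[-1] - positions[0]


if __name__ == "__main__":
    # Hypothetical numbers: 4000 rows read in 1/60 s, subject moving 3000 px/s.
    print(f"rolling readout: {rolling_shutter_skew(4000, 1 / 60, 3000):.1f} px of skew")
    # A global readout is modeled as zero readout time: no skew at all.
    print(f"global readout:  {rolling_shutter_skew(4000, 0.0, 3000):.1f} px of skew")
```

With those assumed numbers the last row sees the edge about 50 pixels further along than the first row, which is the slanted look of the rolling-shutter effect. Setting the readout time to zero, as an ideal simultaneous readout would, makes the skew disappear.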

The solution seems quite obvious, doesn’t it? Simply read the sensor and all its pixels at the same time, at exactly the same instant. That would also leave more time for sending other data (such as autofocus information) and allow faster work between captures, because the sensor elements could prepare for the next round of readout instead. No more staring at the beach ball while the last line is pushed out.

But is it that easy? Yes and no. The idea is very simple, but the road there is not. Sony explains that developing a sensor that reads out its entire surface simultaneously took time. At the same time, solving this major problem paves the way for many possibilities in the future. For you as a consumer, it basically solves three things: it allows a higher number of frames per second, very fast shutter speeds, and very fast shutter speeds with flash (which, simply put, is not possible with an electronic shutter on a sensor that is read row by row). On top of that, it removes the distortions described above, so you can pan close up on a bobsled without distorting the image. Good news, then.
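To see why flash and very short shutter speeds clash without a global shutter, consider a shutter slit (or a rolling readout) that needs a fixed time to sweep the frame. The minimal Python sketch below assumes a constant sweep speed and an instantaneous flash; the 1/250 s sweep time in the example is a hypothetical figure, not a measured one. It estimates how much of the frame height is actually exposed at the instant the flash fires.

```python
from __future__ import annotations

from typing import Optional


def flash_lit_fraction(exposure_s: float, travel_s: Optional[float]) -> float:
    """Fraction of the frame height open when a very short flash fires.

    travel_s is the time the shutter slit (or a rolling readout) needs to
    sweep the whole frame; None models a global shutter, where every row
    is exposed during the same interval.
    Simplifying assumptions: constant sweep speed, instantaneous flash
    fired while the slit is fully on the sensor.
    """
    if travel_s is None:
        return 1.0  # global shutter: all rows are open together
    # A slit of duration exposure_s sweeping over travel_s covers this share
    # of the frame at any instant; it cannot exceed the whole frame.
    return min(1.0, exposure_s / travel_s)


if __name__ == "__main__":
    travel = 1 / 250  # hypothetical sweep time of the slit or readout
    for shutter in (1 / 250, 1 / 1000, 1 / 8000):
        lit = flash_lit_fraction(shutter, travel)
        print(f"1/{round(1 / shutter)} s: {lit:.0%} of the frame lit")
    print("global shutter:", flash_lit_fraction(1 / 8000, None))
```

Under these assumptions a 1/1000 s exposure lets the flash light only a quarter of the frame, which is why flash sync is normally capped. With a global shutter the whole frame is open for the same interval at any shutter speed.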

Sony’s solution was to redesign the sensor so that each pixel uses two sensor elements, or photodiodes, instead of one. The first captures light at exactly the same moment and for exactly as long as every other pixel, then hands its information over to the second, which stores the data that will form the pixel. The first photodiode can then start working again, while the second waits its turn to be read out.
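The two-stage pixel can be modeled in a few lines. This is a deliberately simplified Python sketch, not Sony’s circuit: the `Pixel` class, its `photodiode` and `storage` fields and the timing helper are hypothetical names, and charge is treated as a plain number. It follows the order of events described above: every pixel exposes over the same interval, all pixels hand their charge to storage at once, and the rows are then read from storage while the photodiodes are free for the next exposure.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import List


@dataclass
class Pixel:
    photodiode: float = 0.0  # charge collected during the current exposure
    storage: float = 0.0     # charge parked here until the row is read out


def global_exposure(pixels: List[List[Pixel]], light: List[List[float]],
                    exposure_s: float) -> None:
    """Every pixel integrates light over the same interval (global shutter)."""
    for pixel_row, light_row in zip(pixels, light):
        for px, flux in zip(pixel_row, light_row):
            px.photodiode += flux * exposure_s


def transfer_to_storage(pixels: List[List[Pixel]]) -> None:
    """All pixels move their charge to storage at the same moment and reset."""
    for pixel_row in pixels:
        for px in pixel_row:
            px.storage, px.photodiode = px.photodiode, 0.0


def read_out(pixels: List[List[Pixel]]) -> List[List[float]]:
    """Rows are read one after another, but from storage, so the image is
    already frozen; the photodiodes can start the next exposure meanwhile."""
    return [[px.storage for px in pixel_row] for pixel_row in pixels]


def min_frame_interval(exposure_s: float, readout_s: float,
                       has_storage: bool) -> float:
    """With storage, exposure and readout overlap; without it they add up."""
    return max(exposure_s, readout_s) if has_storage else exposure_s + readout_s


if __name__ == "__main__":
    sensor = [[Pixel() for _ in range(2)] for _ in range(2)]
    global_exposure(sensor, [[2.0, 4.0], [6.0, 8.0]], exposure_s=0.5)
    transfer_to_storage(sensor)
    print(read_out(sensor))  # [[1.0, 2.0], [3.0, 4.0]]
```

Under the same simplification, `min_frame_interval` shows why the extra storage element helps speed: exposure and readout run in parallel instead of queueing behind each other.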

The architecture delivers the extra speed and the new possibilities, but of course there are always drawbacks. For one thing, each sensor element becomes more crowded, leaving little room for anything besides the photodiodes. That space was previously used for a dual-gain circuit, which provides two different base ISOs for different ISO settings and improves image quality at particularly high ISO values.

Sony chose a two-layer design for its new sensor, which means that this circuitry simply cannot be fitted here, and as a result the dual-base-ISO improvement is not available on the Sony A9 III. The second drawback is that placing two photodiodes in the same circuit reduces the charge capacity of the receiving photodiode, which in turn pushes the base ISO of the Sony A9 III up to 250, compared with the ISO 100 that is common today. That costs roughly one and a third stops of noise performance, something the average photographer hardly thinks about, and not a problem at all for someone who shoots sports and moves up the ISO scale to get the shot anyway.
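The gap between the two base ISO values is easy to express in stops, since each doubling of ISO is one stop. A quick check in Python (the helper name is just for illustration):

```python
import math


def stops_between(iso_low: float, iso_high: float) -> float:
    """Exposure difference in stops; one stop is a doubling of ISO."""
    return math.log2(iso_high / iso_low)


if __name__ == "__main__":
    # Base ISO 100 (common today) versus base ISO 250 (Sony A9 III).
    print(f"{stops_between(100, 250):.2f} stops")  # 1.32 stops
```

That is roughly one and a third stops, the same as going from ISO 100 to 250 in the third-stop increments (100, 125, 160, 200, 250) that most cameras use.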

However, being able to capture 120 full-size raw images per second, with autofocus tracking continuously without interruption or focus problems, also raises some questions for the user. If you don’t shoot action, you’re hardly concerned with the rolling shutter issue, and if you do shoot sports, 120 fps is more than enough at the top end. Very fast-moving subjects may justify shooting at more frames per second than other cameras can handle today. But what is the real difference between 50, 100 and 200 frames per second? Maybe not much. And you probably can’t cope with going through 190 frames from a single 1.6-second burst (the capacity of the A9 III’s buffer). When you look at them, the difference between ten consecutive frames is minimal.
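The frame-rate question becomes concrete when you look at the time between frames. This small Python sketch converts frame rates into frame intervals and checks the burst arithmetic quoted above (190 frames at 120 fps); the helper names are illustrative.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames in milliseconds."""
    return 1000.0 / fps


def burst_duration_s(frames: int, fps: float) -> float:
    """How long a burst of `frames` lasts at a constant frame rate."""
    return frames / fps


if __name__ == "__main__":
    for fps in (50, 100, 120, 200):
        print(f"{fps:>3} fps -> {frame_interval_ms(fps):.1f} ms between frames")
    print(f"190 frames at 120 fps -> {burst_duration_s(190, 120):.2f} s")
```

Going from 100 to 200 fps only shrinks the gap between frames from 10 ms to 5 ms, and 190 frames at 120 fps works out to just under 1.6 seconds, in line with the buffer figure above.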

If you shoot quick sequences with a flash that you want to control in a particular way, or in a particular light, there are new possibilities thanks to global shutter technology. Specifically, you can shift exactly when the flash sync is triggered relative to these very short exposures. That lets you compensate for the fact that the flash does not fire at exactly the same instant as the exposure, since a flash has a certain delay between being triggered and reaching full light output on the subject.
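The timing adjustment can be illustrated with simple arithmetic. The Python sketch below is hypothetical: it assumes the flash peaks a fixed delay after being triggered, and it computes when the trigger must be sent so that the peak lands in the middle of a very short exposure. The 50 µs delay is an invented example value, and this is not necessarily how Sony’s firmware handles it.

```python
def trigger_time_us(exposure_start_us: float, exposure_us: float,
                    flash_delay_us: float) -> float:
    """Trigger time that centers the flash peak in the exposure window.

    Times are in microseconds on the camera's clock. A negative result
    means the flash must be triggered before the exposure starts.
    """
    exposure_mid_us = exposure_start_us + exposure_us / 2
    return exposure_mid_us - flash_delay_us


def peak_inside_exposure(trigger_us: float, exposure_start_us: float,
                         exposure_us: float, flash_delay_us: float) -> bool:
    """True if the assumed flash peak falls within the exposure window."""
    peak_us = trigger_us + flash_delay_us
    return exposure_start_us <= peak_us <= exposure_start_us + exposure_us


if __name__ == "__main__":
    exposure = 1e6 / 80000  # an example 1/80000 s exposure: 12.5 microseconds
    delay = 50.0            # hypothetical delay from trigger to flash peak
    t = trigger_time_us(0.0, exposure, delay)
    print(f"trigger at {t:.2f} us")                          # trigger at -43.75 us
    print(peak_inside_exposure(t, 0.0, exposure, delay))     # True
    # Triggering naively at the start of the exposure misses the window.
    print(peak_inside_exposure(0.0, 0.0, exposure, delay))   # False
```

With exposures this short, even a few tens of microseconds of flash delay is several times longer than the exposure itself, which is why an adjustable sync offset matters.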

And for those who want to capture action at high resolution, for example to build a slow-motion sequence that can be cropped or stabilized afterwards, the speed is clearly worth something.

Even so, the old adage still holds: master your technique and be there for the shot. What makes a picture unique isn’t whether you took it as a single frame or in a burst, although at 120 fps you obviously have a much larger selection in which to find exactly the right shot at exactly the right moment than if you take a single photo.

The only question is how much pixel peeping you can afford, and how much storage space you’re willing to give up. Incidentally, I read that Google Photos has started grouping similar photos into “stacks” in its app. The next step would be to take this further for photographers, perhaps letting AI pick the best photo from a group of almost identical frames in a split second.
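Picking the “best” frame automatically can be done in many ways, and Google’s method is not described here. As a stand-in, this Python sketch scores each frame of a burst by gradient energy (the sum of squared differences between neighboring pixels, a simple and common sharpness measure) and keeps the highest-scoring one. Frames are assumed to be small grayscale images given as nested lists; the function names are hypothetical, and a real tool would also weigh exposure, faces, composition and more.

```python
from typing import List

Image = List[List[float]]  # grayscale image, row-major, values e.g. 0.0 to 1.0


def gradient_energy(img: Image) -> float:
    """Sum of squared differences to the right and lower neighbors.

    Sharp edges produce large jumps between neighbors, so a sharper frame
    of the same scene scores higher than a blurred one.
    """
    height, width = len(img), len(img[0])
    energy = 0.0
    for y in range(height):
        for x in range(width):
            if x + 1 < width:
                energy += (img[y][x + 1] - img[y][x]) ** 2
            if y + 1 < height:
                energy += (img[y + 1][x] - img[y][x]) ** 2
    return energy


def pick_sharpest(burst: List[Image]) -> int:
    """Index of the frame with the highest sharpness score."""
    return max(range(len(burst)), key=lambda i: gradient_energy(burst[i]))


if __name__ == "__main__":
    blurry = [[0.0, 0.5, 0.5, 1.0], [0.0, 0.5, 0.5, 1.0]]
    sharp = [[0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 1.0, 1.0]]
    print(pick_sharpest([blurry, sharp]))  # 1
```

Gradient energy is only meaningful when comparing near-identical frames of the same scene, which is exactly the burst situation described above.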

Because if I were to do it myself, I’m afraid the beach ball would still be a constant presence.
