Google offers several practical examples. If a user is looking at an image of a T-shirt, they can tap the Lens icon and ask Google to show other clothes in a similar style. Describing the style in a text search can be difficult, but by combining images and text in a single search, users should get more useful results.

The search results page is also being redesigned with the help of MUM. For example, if a user searches for “acrylic painting”, Google understands how users usually explore the topic and which aspects they want to see first. The system recognizes context and can identify more than 350 related topics.

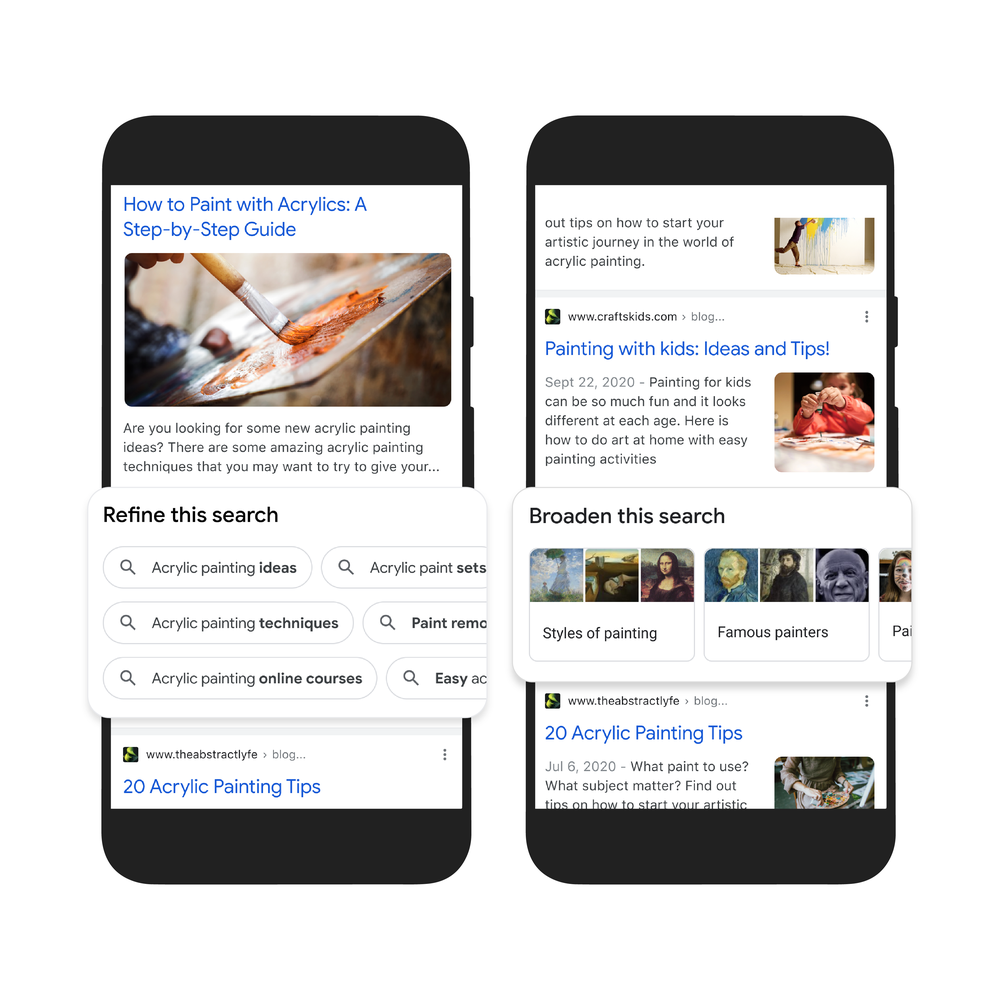

If you search for “acrylic painting,” Google understands how people typically explore the topic, and shows which aspects people are likely to look at first. For example, we can identify more than 350 topics related to acrylic painting, and help you find the right path to follow.

Google will introduce a new, more visually rich results page for certain types of searches, containing images, videos, and articles. The new results page is meant for those times when the user is looking for inspiration.

Google also announced new features related to videos. The system should be able to identify topics related to a video and offer links so that users can delve into those topics and search for more. Thanks to MUM, Google can find related topics even when they are not explicitly mentioned in the video.

Many of the new features will only be released “in the coming months”.
